Scene Prep Aigent

As part of our journey into building agentic brands, I'm taking you inside aigencia's brand-new Scene Prep Aigent, one of the AdMaker aigents we're building.

This is the crucial first step in our AI-powered advertising ecosystem – transforming raw video content into structured, semantic data that our ad-making agents can use to automatically create compelling ads.

"Raw video is like crude oil – valuable, but not immediately usable. Our Scene Prep agent is the refinery that transforms it into high-octane advertising fuel."

The Problem: Raw Video Is Unusable for AI

After building our influencer marketing platform, Collabs.io, we quickly realized the next frontier: helping brands repurpose the massive volume of content being created into effective ads.

But we faced a significant challenge. Raw video files are essentially black boxes to AI:

AI can't "watch" videos directly

Videos carry no semantic description of what's happening in each scene

Content isn't broken down into reusable segments

There's no searchable index of visual elements, themes, or advertising potential

To solve this, we needed a way to transform raw video into a structured format that AI could understand, analyze, and manipulate – essentially making video content "agentic-ready."

Scene Processing Aigent Process

How It Works: The Scene Prep Aigent Pipeline

The Scene Prep agent is actually a multi-stage AI pipeline that transforms raw video into a rich, semantically understood database of advertising assets. Let's break down the five critical processing stages:

1. Scene Detection: Finding Natural Break Points

The Challenge: Videos contain multiple shots and scenes, but nothing in the file marks where one ends and the next begins, so these transitions are invisible to AI.

The first job of our Scene Prep agent is to analyze the entire video and detect natural scene changes – those moments where the camera cuts from one shot to another, or when significant visual changes occur.

Our scene detection is adaptive. Rather than applying a single, one-size-fits-all sensitivity, the agent tries multiple threshold settings and keeps the one that yields the best 3-12 scenes for advertising purposes. Too few scenes and we lose flexibility; too many and we fragment the narrative. The agent balances these factors without human intervention.
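The article doesn't publish the detection code. Below is a minimal, hypothetical Python sketch of the adaptive idea. It assumes a per-frame "visual change" score has already been computed, for example a color-histogram difference between consecutive frames scaled to 0-100. The function names, the threshold ladder, and the `min_gap` value are illustrative assumptions, not aigencia's actual settings.

```python
from typing import List, Optional, Sequence, Tuple


def detect_cuts(scores: Sequence[float], threshold: float, min_gap: int = 15) -> List[int]:
    """Return the frame indices where scenes start; frame 0 always starts one.

    scores[i] is the visual change between frame i - 1 and frame i (scores[0] is unused).
    """
    cuts = [0]
    for frame in range(1, len(scores)):
        # Require min_gap frames since the last cut so a flash or fast pan
        # doesn't produce a burst of tiny scenes.
        if scores[frame] >= threshold and frame - cuts[-1] >= min_gap:
            cuts.append(frame)
    return cuts


def _miss(count: int, lo: int, hi: int) -> int:
    """How far a scene count falls outside [lo, hi] (0 if inside)."""
    if count < lo:
        return lo - count
    if count > hi:
        return count - hi
    return 0


def adaptive_detect(
    scores: Sequence[float],
    thresholds: Sequence[float] = (45.0, 35.0, 27.0, 20.0, 15.0),  # hypothetical ladder
    min_scenes: int = 3,
    max_scenes: int = 12,
    min_gap: int = 15,
) -> Tuple[float, List[int]]:
    """Try thresholds from strict to lenient and return the first whose scene count fits.

    If none fits, fall back to the threshold whose count came closest to the range.
    """
    best: Optional[Tuple[int, float, List[int]]] = None
    for t in thresholds:
        cuts = detect_cuts(scores, t, min_gap)
        miss = _miss(len(cuts), min_scenes, max_scenes)
        if miss == 0:
            return t, cuts
        if best is None or miss < best[0]:
            best = (miss, t, cuts)
    if best is None:
        raise ValueError("thresholds must not be empty")
    return best[1], best[2]
```

The same ladder could also wrap an off-the-shelf detector, such as PySceneDetect's `ContentDetector`, which exposes a `threshold` parameter. The adaptive part is only the outer loop that reruns detection until the scene count lands in range.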

Adaptive Scene Detection Process

2. Scene Slicing: Creating Independent Video Segments

The Challenge: Even after identifying scene boundaries, extracting clean, usable video segments is complex.

Once we've identified scene boundaries, the next step is to extract each scene as an individual video clip. This is critical for two reasons:

It allows our AI to manipulate scenes as independent units

It creates reusable assets that can be combined in different ways

Why Scene Independence Matters

Think of scenes like LEGO blocks. Each block is valuable on its own, but the real power comes from being able to combine them in countless different ways to build new structures. Scene slicing creates these reusable building blocks for your ad content.

Behind the scenes, this process is surprisingly complex. We had to ensure perfect frame alignment, maintain quality levels suitable for professional advertising, and handle edge cases like very short scenes or unusual video codecs. Each scene is stored as a standalone high-quality video file that can be accessed directly via S3 URLs.
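As a rough illustration of the slicing step, the sketch below turns scene boundaries (in seconds) into ffmpeg commands and pairs each clip with an S3 key. It is hypothetical. The one-second minimum scene length, the x264/AAC encoding settings, and the `videos/<id>/scenes/` key layout are assumptions chosen for illustration.

```python
from typing import Dict, List, Tuple

Bounds = List[Tuple[float, float]]  # (start_sec, end_sec) per scene


def merge_short_scenes(bounds: Bounds, min_len: float = 1.0) -> Bounds:
    """Fold any scene shorter than min_len seconds into the scene before it."""
    merged: Bounds = []
    for start, end in bounds:
        if merged and end - start < min_len:
            merged[-1] = (merged[-1][0], end)
        else:
            # A short *first* scene has nothing to merge into, so it is kept.
            merged.append((start, end))
    return merged


def ffmpeg_slice_cmd(src: str, start: float, end: float, out: str) -> List[str]:
    """Build an ffmpeg command that cuts [start, end) out of src.

    Re-encoding (instead of "-c copy") keeps cuts frame-accurate, because a
    stream copy can only begin on a keyframe.
    """
    return [
        "ffmpeg", "-y",
        "-ss", f"{start:.3f}", "-i", src,
        "-t", f"{end - start:.3f}",
        "-c:v", "libx264", "-crf", "18", "-preset", "medium",
        "-c:a", "aac",
        out,
    ]


def plan_slices(src: str, bounds: Bounds, video_id: str, min_len: float = 1.0) -> List[Dict[str, object]]:
    """Pair each scene's ffmpeg command with a (hypothetical) S3 object key."""
    plan: List[Dict[str, object]] = []
    for i, (start, end) in enumerate(merge_short_scenes(bounds, min_len)):
        local = f"scene_{i:03d}.mp4"
        plan.append({
            "cmd": ffmpeg_slice_cmd(src, start, end, local),
            "s3_key": f"videos/{video_id}/scenes/{local}",
        })
    return plan
```

Each command could be run with `subprocess.run(cmd, check=True)`. The resulting file could then be uploaded to the brand's own bucket with boto3's `upload_file(Filename, Bucket, Key)`. Merging very short scenes into their neighbors is one simple way to handle the short-scene edge case.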

3. Frame Extraction: Creating Visual Snapshots

The Challenge: Video is temporal (exists across time), but AI vision models work best with static images.

For AI analysis, we need to turn each scene into a set of static visual representations. That's where frame extraction comes in.

We don't just take random frames. Our agent extracts 5-10 frames at evenly distributed time points throughout each scene, with the number of frames calibrated to the scene's duration. This ensures we capture the full narrative arc of each scene, even when it contains motion or transitions.
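One way to implement "5-10 evenly distributed frames, calibrated to duration" is sketched below. Two details are assumptions for illustration, not the agent's documented behavior: the rule of roughly one frame every two seconds (clamped to 5-10), and the choice to sample at slice midpoints.

```python
from typing import List


def frame_count(duration: float, seconds_per_frame: float = 2.0, lo: int = 5, hi: int = 10) -> int:
    """Roughly one frame per `seconds_per_frame`, clamped to the 5-10 range."""
    return max(lo, min(hi, round(duration / seconds_per_frame)))


def frame_timestamps(start: float, end: float) -> List[float]:
    """Evenly spaced sample times: the midpoint of each of n equal slices.

    Midpoints avoid grabbing the very first or last frame, which often sits
    on a cut or transition.
    """
    duration = end - start
    n = frame_count(duration)
    step = duration / n
    return [round(start + (k + 0.5) * step, 3) for k in range(n)]


def ffmpeg_frame_cmd(scene_path: str, t: float, out_jpg: str) -> List[str]:
    """Grab a single high-quality JPEG frame at time t (seconds) from a scene clip."""
    return ["ffmpeg", "-y", "-ss", f"{t:.3f}", "-i", scene_path,
            "-frames:v", "1", "-q:v", "2", out_jpg]
```

When sampling from an already-sliced scene file, pass `start=0` and the clip's duration, because timestamps are then relative to the clip.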

Frame Extraction Strategy

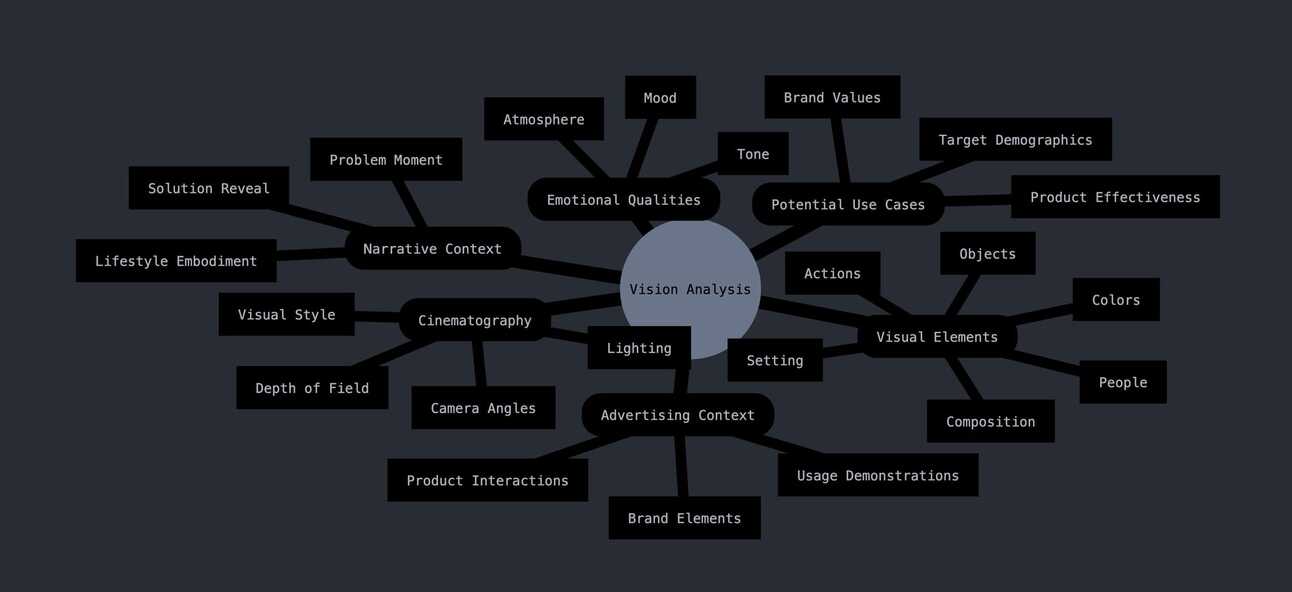

4. AI Vision Analysis: Understanding Visual Content

The Challenge: Translating pixels into meaningful advertising insights requires sophisticated AI vision models.

This is where the magic truly happens. We send those static frames to an AI vision model that can actually "see" and understand what's in the content.

"Training AI to think like an advertising creative was the breakthrough moment. It doesn't just see 'a person drinking water' – it sees 'a moment of refreshment demonstrating product satisfaction with natural lighting that conveys authenticity.'"

The analysis prompt is carefully engineered to extract advertising-specific insights – not just what's in the scene, but how it might be used in different advertising contexts. We're teaching the AI to "think like an ad creative" by focusing on emotional qualities, narrative context, and potential use cases.

The vision model processes multiple frames from each scene and generates a detailed description along with advertising-specific highlights. This is the crucial step that transforms pixels into semantic meaning – the AI is actually understanding what's happening in each scene and why it might be valuable.

Example Scene Analysis Output:

Scene Description: A young woman in athletic wear smiles while using a water bottle after exercise in a sunlit park. The close-up shot captures genuine satisfaction, with natural lighting creating a warm, authentic atmosphere. The subject's direct engagement with the product demonstrates ease of use and immediate refreshment, while the outdoor setting associates the product with an active, healthy lifestyle.

Highlights:

• Authentic product satisfaction moment showing immediate benefit

• Natural lighting creating positive, aspirational mood

• Active lifestyle association strengthening brand positioning

• Clear product demonstration with focused framing
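The article doesn't name the vision model or publish its prompt, so the following is only a hypothetical sketch of the request/response plumbing. It packages a scene's JPEG frames as base64 image blocks; this content-block shape is the one used by Anthropic's Messages API, chosen here as one example. It then asks for JSON with the `description` and `highlights` fields from the example output and validates the reply. `ANALYSIS_PROMPT`, `SceneAnalysis`, and both helper functions are illustrative names.

```python
import base64
import json
from dataclasses import dataclass, field
from typing import List

# Hypothetical prompt wording; the real prompt is not published.
ANALYSIS_PROMPT = (
    "You are an advertising creative. These frames come from one scene, in order. "
    "Return JSON with keys 'description' (one paragraph covering subjects, action, "
    "setting, lighting, mood and shot type) and 'highlights' (3-5 short strings on "
    "advertising potential). Return only the JSON."
)


@dataclass
class SceneAnalysis:
    description: str
    highlights: List[str] = field(default_factory=list)


def build_content(frames_jpeg: List[bytes]) -> list:
    """Frames as base64 image blocks, followed by the text instruction."""
    blocks: list = [
        {"type": "image",
         "source": {"type": "base64", "media_type": "image/jpeg",
                    "data": base64.b64encode(f).decode("ascii")}}
        for f in frames_jpeg
    ]
    blocks.append({"type": "text", "text": ANALYSIS_PROMPT})
    return blocks


def parse_analysis(raw: str) -> SceneAnalysis:
    """Extract and validate the JSON object from the model's reply."""
    # Models sometimes wrap JSON in prose; keep the outermost {...} span.
    start, end = raw.find("{"), raw.rfind("}")
    if start == -1 or end < start:
        raise ValueError("no JSON object in model output")
    data = json.loads(raw[start:end + 1])
    desc = data.get("description")
    highlights = data.get("highlights", [])
    if not isinstance(desc, str) or not desc.strip():
        raise ValueError("missing description")
    if not isinstance(highlights, list) or not all(isinstance(h, str) for h in highlights):
        raise ValueError("highlights must be a list of strings")
    return SceneAnalysis(desc.strip(), [h.strip() for h in highlights])
```

Asking for JSON and validating it before storage means a malformed reply fails loudly at this stage. Otherwise it could surface later as an empty record in the vector database.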

Vision Analysis Dimensions

5. Vectorization: Making Content Semantically Searchable

The Challenge: Converting rich visual analysis into a format that enables semantic search and AI understanding.

The final transformation is perhaps the most powerful. We convert all the rich textual analysis into mathematical vector embeddings – essentially translating the meaning of each scene into a format that allows for semantic search and matching.

Each scene is represented by a 1,024-dimensional vector that captures its semantic meaning, along with comprehensive metadata about timestamps, URLs, and technical details.

This is the same vectorization concept I've discussed in my previous articles, but applied to video content. We use Pinecone's vector database to store not just the embeddings, but also all the rich metadata about each scene – from timestamps to thumbnail URLs to descriptions.
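Here is a minimal sketch of what one scene's vector record might look like. It assumes the `{id, values, metadata}` record shape that the Pinecone Python client accepts for upserts. The `embed` function is a stand-in for whichever text-embedding model produces the 1,024-dimensional vectors. The ID scheme and metadata field names are hypothetical.

```python
from typing import Callable, Dict, List, Sequence

EMBED_DIM = 1024  # the 1,024-dimensional vectors described above


def scene_text(description: str, highlights: Sequence[str]) -> str:
    """The text that gets embedded: description plus highlights."""
    return description + "\nHighlights:\n" + "\n".join(f"- {h}" for h in highlights)


def build_record(
    video_id: str,
    scene_index: int,
    start_sec: float,
    end_sec: float,
    clip_url: str,
    thumbnail_url: str,
    description: str,
    highlights: List[str],
    embed: Callable[[str], List[float]],  # hypothetical embedding function
) -> Dict[str, object]:
    """Build one {id, values, metadata} vector record for a scene."""
    values = embed(scene_text(description, highlights))
    if len(values) != EMBED_DIM:
        raise ValueError(f"expected {EMBED_DIM} dimensions, got {len(values)}")
    return {
        "id": f"{video_id}-scene-{scene_index:03d}",
        "values": values,
        # Flat strings, numbers, and string lists, which Pinecone metadata supports.
        "metadata": {
            "video_id": video_id,
            "scene_index": scene_index,
            "start_sec": start_sec,
            "end_sec": end_sec,
            "duration_sec": round(end_sec - start_sec, 3),
            "clip_url": clip_url,
            "thumbnail_url": thumbnail_url,
            "description": description,
            "highlights": highlights,
        },
    }
```

Records could then be written with something like `Pinecone(api_key=API_KEY).Index("scenes").upsert(vectors=records)`, where the index name is a placeholder. Embedding the description together with the highlights lets queries match both what a scene shows and how it might be used in an ad.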

The result is transformative: video content that was previously opaque to AI is now semantically searchable and understandable. Our ad-making agents can now query this database with requests like "find me scenes that show product satisfaction" or "I need closeup shots of people using the product outdoors" – and get meaningful, relevant results.
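To show what "semantically searchable" means mechanically, here is a toy, in-memory version of the query step: embed the request, then rank scenes by cosine similarity. The 3-dimensional vectors are stand-ins for real 1,024-dimensional embeddings, and `search` is a hypothetical helper. In Pinecone, the equivalent call is `index.query(vector=..., top_k=..., include_metadata=True)`.

```python
import math
from typing import Dict, List, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity; 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def search(query_vec: Sequence[float], records: List[Dict], top_k: int = 3,
           min_score: float = 0.0) -> List[Dict]:
    """Rank records by cosine similarity to the query vector (highest first)."""
    scored = [(cosine(query_vec, r["values"]), r) for r in records]
    scored = [pair for pair in scored if pair[0] >= min_score]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [{"id": r["id"], "score": round(s, 4), "metadata": r["metadata"]}
            for s, r in scored[:top_k]]
```

A request like "find me scenes that show product satisfaction" would first be embedded with the same model used for the scenes. Its vector is then compared against every stored scene vector.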

Vectorization and Semantic Database

The End of Manual Video Tagging

One of the most time-consuming aspects of traditional video management is manual tagging – the painstaking process where humans watch videos and assign keywords, categories, and descriptors to make content searchable.

The Scene Prep Aigent completely eliminates this burdensome task through its AI vision analysis capabilities:

AI Vision vs Manual Tagging

Limitations of Manual Tagging

Traditional tagging suffers from severe limitations:

Subjective and Inconsistent: Different team members tag the same content differently

Limited Vocabulary: Most tagging systems use just 15-20 tags per video

Surface-Level Only: Tags rarely capture emotional qualities, cinematography details, or advertising potential

Time-Intensive: A 2-minute video might take 10-15 minutes to properly tag

No Semantic Understanding: Tags are just keywords without any understanding of meaning or context

How AI Vision Analysis Transforms the Process

The Scene Prep agent's vision analysis offers profound advantages:

Comprehensive Description: Instead of a handful of tags, each scene gets a detailed paragraph describing all visual elements

Emotional Intelligence: The AI identifies mood, tone, and emotional qualities that tags miss

Advertising Context: Scenes are analyzed specifically for their advertising potential and use cases

Objective Consistency: Every video is processed with the same thorough analytical framework

Semantic Understanding: The AI doesn't just label what it sees – it understands the meaning and relationships

Perhaps most importantly, this process happens automatically, without human intervention. What once took a marketing team days or weeks now happens in minutes or hours, with far more sophisticated results.

The Hidden Cost of Manual Tagging

A mid-sized brand with 500 videos in their library might spend 150-200 hours annually on manual tagging – that's nearly a month of full-time work. Despite this investment, studies show that up to 70% of tagged content is never discovered or reused because of poor tagging schemas and search limitations.
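As a rough check on these figures, assuming a 40-hour work week, the hours break down as follows:

```latex
\frac{150\,\text{h}}{500\ \text{videos}} = 18\ \text{min/video}, \qquad
\frac{200\,\text{h}}{500\ \text{videos}} = 24\ \text{min/video}

1\ \text{month} \approx \frac{40\,\text{h/week} \times 52\ \text{weeks}}{12} \approx 173\,\text{h}
```

So 150-200 hours brackets roughly one month of full-time work.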

This isn't just a time-saving feature – it's a fundamental shift in how brands understand and extract value from their video content. By removing the manual tagging bottleneck, you're not only saving resources but also unlocking far richer insights about your content than human tagging could ever provide.

The Magic Behind the Scenes: Agent Orchestration

What makes this whole system truly powerful is the orchestration layer. Each of these five processing stages is handled by a specialized module, all coordinated through an event-driven architecture.

"The real innovation isn't just the AI components – it's how they work together. Like a symphony orchestra, each specialized instrument plays its part at exactly the right moment to create something greater than the sum of its parts."

The entire pipeline is asynchronous and resilient. If one stage encounters an issue, it can be retried without losing the work done in previous steps. Each video moves through the complete pipeline, emitting streaming progress updates along the way so brands can monitor it in real time.
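The orchestration code isn't shown in the article. Here is a hypothetical asyncio sketch of the two properties described. First, stage results are checkpointed, so a retry or rerun skips finished work. Second, every stage emits progress events. The stage names, the in-memory `checkpoint` dict (a durable store would be needed in practice), and the exponential backoff settings are assumptions.

```python
import asyncio
from typing import Any, Awaitable, Callable, Dict, List, Sequence, Tuple

StageFn = Callable[[Dict[str, Any]], Awaitable[Any]]
Stage = Tuple[str, StageFn]              # e.g. ("detect", detect_scenes), hypothetical
Emit = Callable[[Dict[str, Any]], None]  # receives progress events


async def run_stage(name: str, fn: StageFn, state: Dict[str, Any],
                    retries: int, emit: Emit, base_delay: float) -> Any:
    """Run one stage, retrying with exponential backoff on failure."""
    if retries < 1:
        raise ValueError("retries must be >= 1")
    for attempt in range(1, retries + 1):
        try:
            result = await fn(state)
            emit({"stage": name, "status": "done", "attempt": attempt})
            return result
        except Exception as exc:
            emit({"stage": name, "status": "error", "attempt": attempt, "error": str(exc)})
            if attempt == retries:
                raise
            await asyncio.sleep(base_delay * 2 ** (attempt - 1))


async def process_video(video_id: str, stages: Sequence[Stage],
                        checkpoint: Dict[str, Dict[str, Any]], emit: Emit,
                        retries: int = 3, base_delay: float = 0.5) -> Dict[str, Any]:
    """Run stages in order; results already in `checkpoint` are never recomputed."""
    state = checkpoint.setdefault(video_id, {"video_id": video_id})
    for name, fn in stages:
        if name in state:
            emit({"stage": name, "status": "skipped"})
            continue
        emit({"stage": name, "status": "started"})
        state[name] = await run_stage(name, fn, state, retries, emit, base_delay)
    return state


async def process_many(video_ids: Sequence[str], stages: Sequence[Stage],
                       checkpoint: Dict[str, Dict[str, Any]], emit: Emit,
                       concurrency: int = 8) -> List[Dict[str, Any]]:
    """Process many videos in parallel, at most `concurrency` at a time."""
    sem = asyncio.Semaphore(concurrency)

    async def one(video_id: str) -> Dict[str, Any]:
        async with sem:
            return await process_video(video_id, stages, checkpoint, emit)

    return await asyncio.gather(*(one(v) for v in video_ids))
```

`process_many` caps concurrency with a semaphore. This is one simple way to run many videos in parallel without exhausting downstream API rate limits.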

Scene Prep Aigent Orchestration

Why Brands Must Own Their Digital Assets

When we built the Scene Prep Aigent, we made a critical architectural decision: all processed assets and vector data would be stored in the brand's own cloud infrastructure – specifically, their S3 buckets and Pinecone vector database instances.

This wasn't just a technical choice; it was a strategic one that directly impacts your brand's future in the agentic economy.

"In the agentic future, the brands that own and control their own semantic data will have an insurmountable advantage over those who've surrendered it to third-party platforms."

The SaaS Trap: Why Traditional Solutions Fall Short

Most brands today rely on a fragmented collection of SaaS tools to manage their digital assets:

A digital asset management (DAM) system to store videos and images

A content management system (CMS) to publish and organize content

Analytics platforms to measure performance

Editing tools to modify and repurpose content

Each of these systems creates a data silo, and none of them prepares your content for the agentic future. Even worse, many of these platforms claim ownership or usage rights to the semantic understanding they generate from your content.

The Strategic Advantages of Asset Ownership

When you own your digital assets and their semantic representations in your own infrastructure, you gain several critical advantages:

1. Data Sovereignty

Your content – and the valuable semantic understanding derived from it – remains completely under your control. You're not renting access to your own intellectual property from a third party.

2. Agent Accessibility

In the coming agentic economy, AI agents will need direct, permissioned access to your brand's semantic representations. When these live in your own infrastructure, you control exactly how, when, and which agents can access your content.

3. Future-Proofing

Most SaaS platforms are built on legacy architectures that weren't designed for the agentic future. By establishing your vector database now, you're building on the architecture that will power the next decade of marketing.

4. Cost Efficiency

While the initial setup requires investment, owning your infrastructure eliminates ongoing SaaS fees that typically increase as your content library grows. The more content you create, the more financially advantageous ownership becomes.

5. Competitive Advantage

Brands that build these semantic libraries now will have years of accumulated advantage over competitors who delay. The richness and depth of your vector database directly impacts what your agents can accomplish.

The Hidden Value of Vector Libraries

Your vector database is rapidly becoming your brand's most valuable digital asset. Unlike raw videos that depreciate over time, properly vectorized content increases in value as your library grows and as agent technology advances.

Preparing for the Model Context Protocol (MCP) Future

The Scene Prep Aigent isn't just creating a library of video assets – it's preparing your brand for seamless integration with the emerging Model Context Protocol (MCP) ecosystem.

For those unfamiliar, the Model Context Protocol (MCP) is an open standard, introduced by Anthropic in late 2024, that defines how AI models and agents connect to data sources and tools. Think of MCP as a "USB-C port for AI": a universal connector that lets any MCP-compatible AI system plug into your brand's data ecosystem through standardized interfaces.

Why MCP Matters for Your Video Assets

When your processed video assets live in your own vector database, they're perfectly positioned to become a rich context source for MCP-compatible AI systems. This means:

Universal Access: Once your video asset database is exposed through an MCP server, any MCP-compatible AI system can query and use it without custom integration work for each system

Controlled Permissions: You define exactly what data is shared and with which systems through standardized authentication protocols

Future Compatibility: As new AI models and agents emerge, they'll be able to seamlessly tap into your existing video asset database

No Vendor Lock-in: Because MCP is an open protocol, you're not tied to any single AI provider or platform
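Here is a hypothetical sketch of exposing a scene library over MCP. The code defines a `search_scenes` tool and, when the `mcp` package is installed, registers it with `FastMCP` from the official MCP Python SDK. The sample data and the word-overlap ranking are placeholders. A real server would embed the query and search the brand's vector index instead.

```python
from typing import Any, Dict, List

# Placeholder rows; in practice these would come from the brand's vector index.
SCENES: List[Dict[str, Any]] = [
    {"id": "v1-scene-000",
     "description": "Woman drinks from bottle after a run in a sunlit park",
     "clip_url": "s3://example-bucket/v1/scene_000.mp4"},
    {"id": "v1-scene-001",
     "description": "Close-up of bottle on a kitchen counter",
     "clip_url": "s3://example-bucket/v1/scene_001.mp4"},
]


def search_scenes(query: str, top_k: int = 5) -> List[Dict[str, Any]]:
    """Find brand video scenes matching a natural-language request."""
    # Placeholder ranking by word overlap; a real server would embed the
    # query and run a vector search instead.
    words = set(query.lower().split())
    scored = []
    for scene in SCENES:
        overlap = len(words & set(scene["description"].lower().split()))
        if overlap:
            scored.append((overlap, scene))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [scene for _, scene in scored[:top_k]]


try:
    from mcp.server.fastmcp import FastMCP  # official MCP Python SDK ("mcp" package)
except ImportError:
    FastMCP = None

if FastMCP is not None:
    server = FastMCP("scene-library")
    server.tool()(search_scenes)  # registers search_scenes as an MCP tool
```

Calling `server.run()` would serve the tool over stdio, FastMCP's default transport. Any MCP-compatible client could then discover and call `search_scenes` without a bespoke integration.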

"By vectorizing your video content now, you're not just solving today's advertising challenges – you're setting up your brand to thrive in the MCP-powered ecosystem of tomorrow."

This is why we've designed the Scene Prep agent to output data in formats that are already aligned with emerging MCP standards – so your investment in processing today's content won't become obsolete as AI systems evolve.

Practical Implications: Why This Matters for Brands

Now that we've looked under the hood, let's talk about why this technology matters to brand founders:

1. Automated Content Repurposing

The vast majority of brand content is used once and then forgotten. With Scene Prep, every piece of video content you create becomes a searchable, reusable asset in your advertising library.

2. Unprecedented Scale

A human video editor might be able to process a handful of videos per day. Our Scene Prep agent can process hundreds of videos concurrently, 24/7, creating a massive library of ready-to-use ad assets.

3. Semantic Understanding

Traditional video management systems use tags and keywords. Our system actually understands the content, mood, narrative context, and advertising potential of every scene.

4. Autonomous Ad Creation

This is the foundation for true autonomous advertising. In Part 2 of this series, I'll show how our AdMaker agent uses this semantically understood content to automatically generate targeted ads based on campaign goals.

Before vs. After Scene Prep

Before: "I have 20 product videos I need to turn into Instagram ads"

After: "I have a searchable library of 150+ scenes showing product benefits, lifestyle moments, and emotional reactions that my AI can instantly combine into unlimited ad variations."

Becoming an Agentic Brand

As I explained in my vectorization article, becoming an agentic brand requires making your content AI-accessible. The Scene Prep Aigent does exactly this for video content, which has traditionally been the most challenging media type for AI to work with.

By processing your video library through this pipeline, you're transforming static assets into a dynamic, AI-ready content repository that agents can work with. The brands that build these rich semantic content libraries now will have a massive advantage as we move further into the age of AI-driven marketing.

Agentic Brand Transformation

Moving from SaaS Dependency to Agent Autonomy

The transition from traditional SaaS marketing tools to an agent-ready infrastructure doesn't happen overnight. Here's a practical roadmap for brands:

Audit Your Content: Identify your most valuable video assets currently locked in SaaS platforms

Establish Your Infrastructure: Set up your S3 storage and Pinecone vector database

Process Existing Content: Use Scene Prep to transform your existing video library

Develop Agent Workflows: Create clear processes for how agents will access and utilize your content

Integrate With MCP Standards: Ensure your data is accessible through standardized protocols

"The brands that thrive in the agentic economy won't be the ones with the biggest marketing budgets, but those with the richest semantic understanding of their own content and the infrastructure to make it accessible to AI."

The winners in this new paradigm will be the brands that recognize that marketing is rapidly shifting from a human-centered activity to an agent-orchestrated one – and that the foundation of this shift is owning your data, not renting access to it through increasingly obsolete SaaS tools.

What's Coming in Part 2

This is just the beginning of our AdMaker agent ecosystem. Once we have this rich database of semantically understood video scenes, the real magic begins. In Part 2, I'll explain how our Ad Creation agent:

Takes a campaign brief and generates ad concepts

Searches the scene database for perfect matches to each concept

Assembles scenes into coherent narratives

Adds appropriate text overlays, music, and transitions

Produces finished ads without human intervention

We're witnessing the birth of truly autonomous advertising – a world where AI doesn't just assist human creatives, but actively generates high-quality advertising assets from your existing content.

"The brands that become fluent in agent-ready content now will dominate the advertising landscape of tomorrow. This isn't just an efficiency play – it's a fundamental reimagining of how advertising assets are created, managed, and deployed."

Stay tuned for Part 2, where I'll show you how to put these semantically-rich video assets to work.

Book a call with gentic to implement AI agents at your brand.